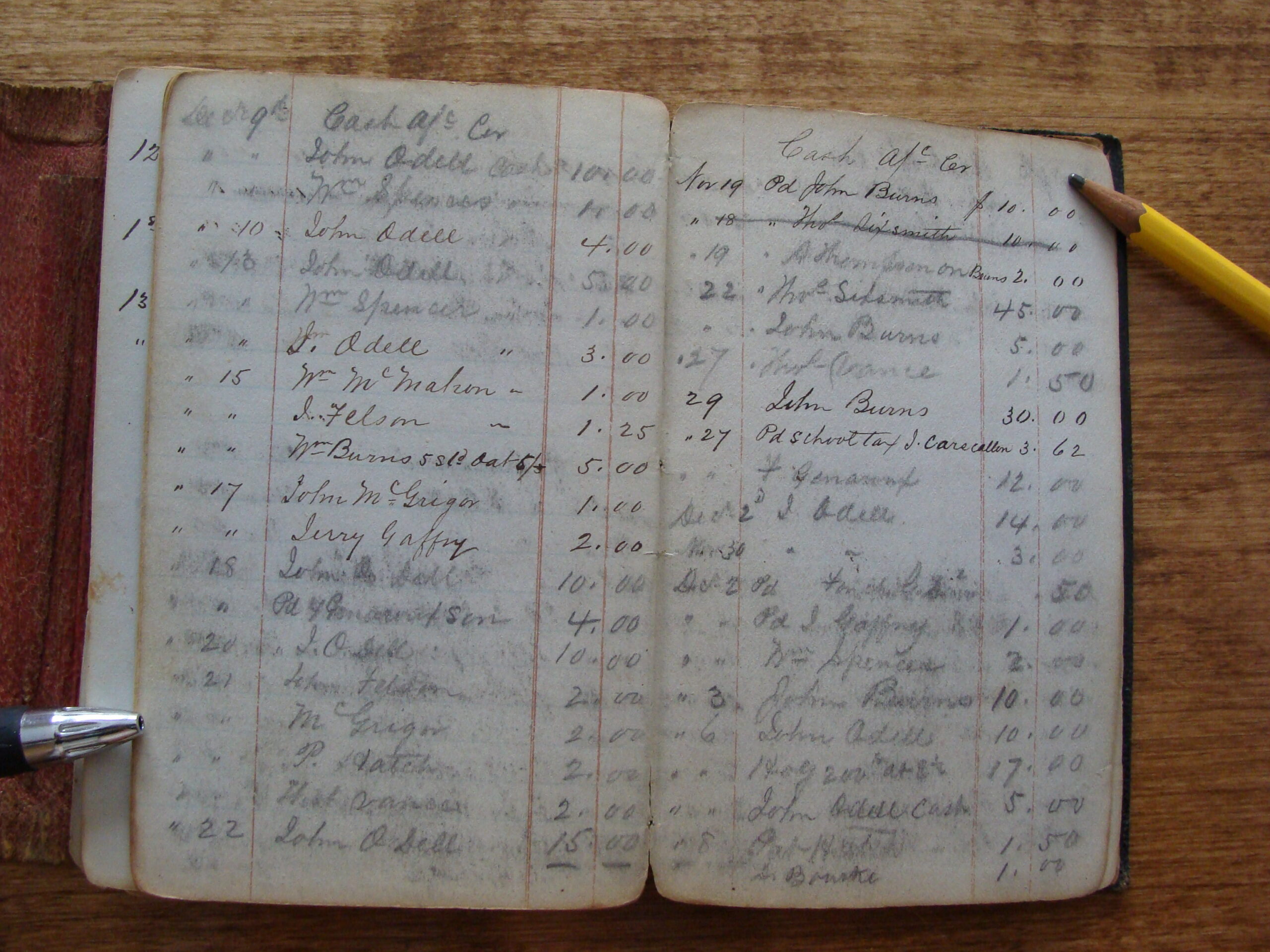

This year’s FOSS4G had many interesting talks, but one presentation in particular caught my attention: “Software Comes and Goes, Mind the Data!” by Arnulf Christl. The 140-character summary of this talk is: data, even as it ages, remains valuable and ought to be considered a “historic document”; software, in contrast, becomes obsolete and dies.

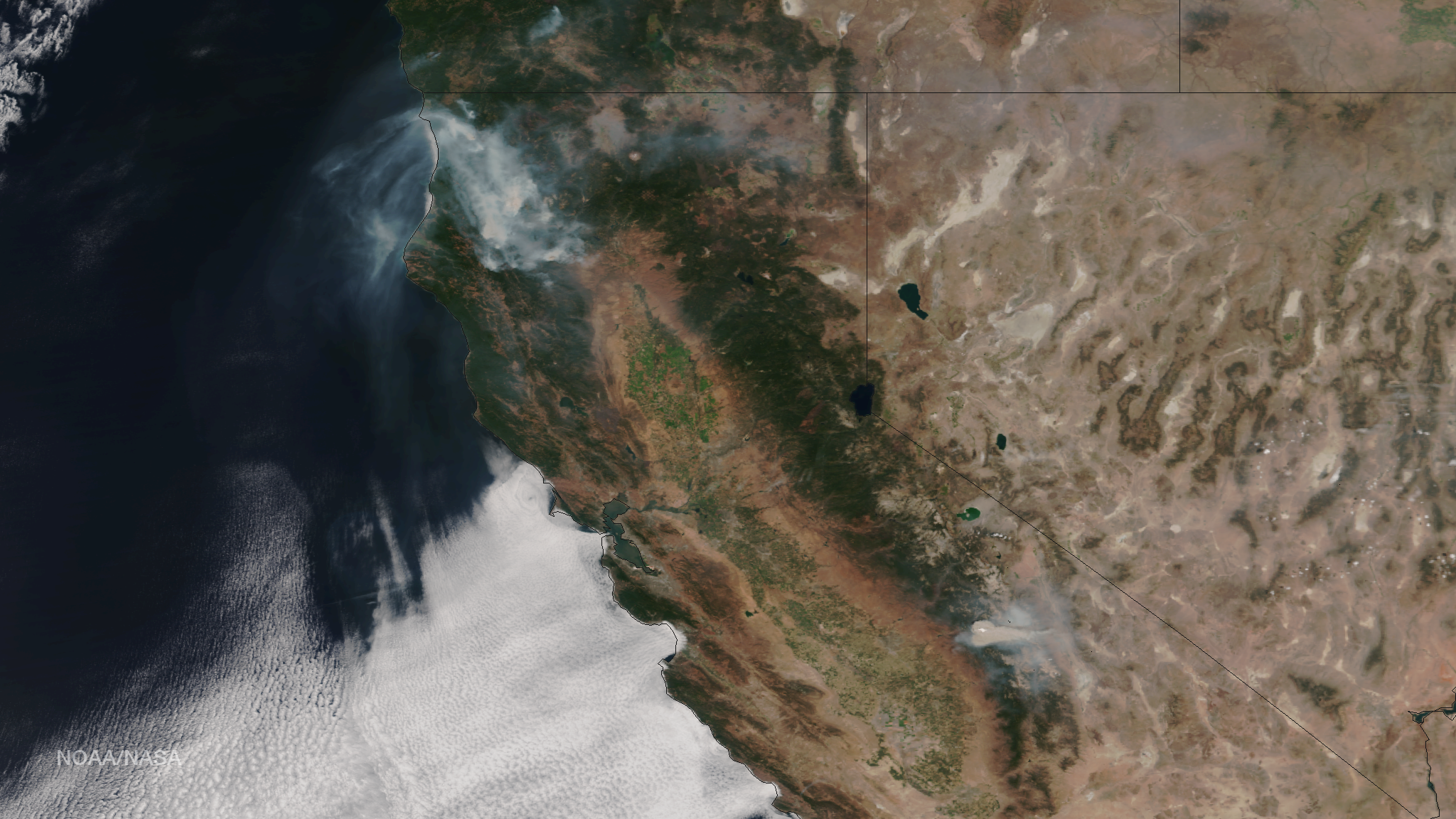

The talk raised some interesting questions and offered amusing analogies, and the overall takeaway is that, as geospatial experts, we should ensure that our data can survive the software we use to create, edit, and interact with it. That’s great advice! But being aware of a problem is not the same as solving it. How can we actually achieve data longevity? And, in particular, how can we achieve it for cultural heritage inventory and asset management?

The Scope of Data

If you’re concerned with data longevity, it’s tempting to conclude that the problem is simply one of metadata and data formats. After all, if I can export my dataset and a little information about its content to a well-known, open format, I ought to be able to import it into any software application I like.

Unfortunately, the reality is a bit more complicated. Getting a data dump from most enterprise applications, even with metadata and open formats, doesn’t necessarily make it usable. Why? Because software invariably represents the complexity of the real world in simplified form, and that simplification is built in as soon as the developers identify the goals of an application and its user community. So, to keep using our data after we move on to new software, we need to understand the scope of the data.

Scope of Dataset = Data Model

The scope of a dataset comes down to two questions: what does the dataset represent, and what level of detail does it support? If you’re a technologist, you can think of the scope of a dataset as its data model. Consider a data collection application designed to build an inventory of cultural heritage. The scope of the dataset is the answer to the question: “What did the software developers include in their definition of cultural heritage?”

Understanding the scope of a dataset is important because it helps us interpret the contents of the data. Let’s return to our database of cultural heritage and look at a common data element: address. In cultural heritage, this single term might mean:

- The location of a building (e.g., 601 Montgomery St, San Francisco, CA 94111)

- A contact point for a person (e.g., john.smith@paveprime.com)

- The location of a web page (e.g., www.getty.edu)

- A speech (e.g., The Gettysburg Address)

If our data is to survive the software used to create it, we need to understand exactly which of these possible addresses are represented in our dataset.

CIDOC CRM for Information Interchange to Preserve Cultural Heritage

In Arches Cultural Heritage software, we use the CIDOC CRM to define the scope of our data precisely. So, if we mean Address as the location of a building, we use the CRM’s E45 Address class. If we mean Address as a contact point for a person, we use the E51 Contact Point class. And for a speech, we could use the E33 Linguistic Object class to refer to the written text of the speech. We leave it to the reader to identify the appropriate CRM class for the oral presentation of the speech.

Because the CIDOC CRM is an international standard, anyone who receives an export from Arches will know exactly which entity we mean by the word “Address”, and so will computers. Because our data models reference the CIDOC CRM ontology, natural language processors or other machine learning algorithms can use Arches data to better understand the scope of an Arches dataset.
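To make the idea concrete, here is a minimal Python sketch that tags each address value with the CRM class for its intended sense before export. The sense names (`building_location`, `person_contact`, `speech_text`), the `export_address` helper and the output shape are hypothetical. The class URIs assume the `http://www.cidoc-crm.org/cidoc-crm/` namespace with identifiers such as `E45_Address`, as used in common RDF encodings of the CRM. Check them against the CRM version you target, since class lists change between releases.

```python
import json

# Assumed namespace for CIDOC CRM classes in RDF encodings; verify against
# the CRM version you actually target.
CRM = "http://www.cidoc-crm.org/cidoc-crm/"

# Hypothetical mapping from each sense of "address" to the CRM class named
# for it in the text.
ADDRESS_SENSES = {
    "building_location": "E45_Address",
    "person_contact": "E51_Contact_Point",
    "speech_text": "E33_Linguistic_Object",
}


def export_address(value: str, sense: str) -> dict:
    """Return a plain record that states which kind of address this is."""
    if sense not in ADDRESS_SENSES:
        # Refuse to export a value whose scope we have not defined.
        raise ValueError(f"unmapped address sense: {sense!r}")
    return {"type": CRM + ADDRESS_SENSES[sense], "value": value}


records = [
    export_address("601 Montgomery St, San Francisco, CA 94111", "building_location"),
    export_address("john.smith@paveprime.com", "person_contact"),
]
print(json.dumps(records, indent=2))
```

Refusing unmapped senses (a web page, for instance, since no class is named for it above) keeps the export from silently blurring the scope of the data.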

Describing the Data

Defining the scope of our dataset is a key step in preserving data. But there’s one more thing we need to consider if we really want our data to outlive our software: we need to define the terms we use for our data attributes.

Let’s again consider a cultural heritage dataset. Suppose it includes a data element for the age or period of a heritage object, and that we use the term “Early Iron Age” to describe the period of an axe. Depending on where you live and where the axe was found, “Early Iron Age” can mean quite different things. In Britain, the Early Iron Age is taken to start 2,800 years ago and end 2,301 years ago. In Bulgaria, on the other hand, “Early Iron Age” can mean a period starting 3,000 years ago and ending 2,500 years ago.
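Writing the two definitions down as data makes the ambiguity explicit. This Python sketch hard-codes the dates from the example; a real system would draw them from a period gazetteer such as PeriodO. The `Period` class and the `candidates`, `resolve` and `overlap` helpers are hypothetical illustrations, not Arches code.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Period:
    label: str
    region: str
    start_years_ago: int
    end_years_ago: int

    @property
    def duration(self) -> int:
        # The start lies further in the past, so it is the larger number.
        return self.start_years_ago - self.end_years_ago


# Dates taken from the example in the text.
PERIODS = [
    Period("Early Iron Age", "Britain", 2800, 2301),
    Period("Early Iron Age", "Bulgaria", 3000, 2500),
]


def candidates(label: str) -> List[Period]:
    """Every period that shares this label, regardless of region."""
    return [p for p in PERIODS if p.label == label]


def resolve(label: str, region: str) -> Period:
    """Return the single period matching label and region, or fail loudly."""
    matches = [p for p in candidates(label) if p.region == region]
    if len(matches) != 1:
        raise LookupError(f"no unique definition of {label!r} for {region!r}")
    return matches[0]


def overlap(a: Period, b: Period) -> Optional[Tuple[int, int]]:
    """The span (in years ago) covered by both periods, if any."""
    start = min(a.start_years_ago, b.start_years_ago)
    end = max(a.end_years_ago, b.end_years_ago)
    return (start, end) if start > end else None


britain = resolve("Early Iron Age", "Britain")
bulgaria = resolve("Early Iron Age", "Bulgaria")
print(len(candidates("Early Iron Age")))  # 2
print(overlap(britain, bulgaria))         # (2800, 2500)
```

The label alone matches two periods, and only the label plus its regional context identifies one. The two definitions agree only between 2,800 and 2,500 years ago. An axe tagged simply “Early Iron Age” could therefore date anywhere from 3,000 to 2,301 years ago.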

Different Meanings for the Same Terms

So, how do we reconcile differing meanings for the same terms? In Arches, we use a thesaurus to organize our list of terms. This allows Arches to define the precise meaning and scope for every value in every dropdown in every data entry form.

This means that Arches can reference international standards for terminology. In our Early Iron Age example, we could use PeriodO as our reference for cultural periods. Other well-known standards, such as the Getty Art & Architecture Thesaurus (AAT), could be used to define other terms in our dataset. Anyone receiving an Arches dataset could then understand unequivocally what we mean by each term describing a cultural heritage object, simply by consulting the referenced standard.
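One way to picture a thesaurus-backed dropdown is to store a concept identifier rather than the label the user sees, and to link each concept to external standards. The sketch below is loosely modeled on SKOS terms (`prefLabel`, `scopeNote`, `exactMatch`). The concept IDs and the `example.org` URIs are placeholders, not real PeriodO or AAT identifiers. The `Concept` class and the `dropdown_options` and `export_term` helpers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Concept:
    """One thesaurus entry: a local id, a display label, and external links."""
    concept_id: str
    pref_label: str
    scope_note: str
    # URIs of equivalent concepts in external vocabularies (PeriodO, AAT, ...).
    exact_match: List[str] = field(default_factory=list)


# Placeholder URIs: replace with real identifiers from the standard you cite.
THESAURUS: Dict[str, Concept] = {
    c.concept_id: c
    for c in [
        Concept("eia-britain", "Early Iron Age",
                "Britain: c. 2,800 to 2,301 years ago",
                ["https://example.org/periodo/britain-early-iron-age"]),
        Concept("eia-bulgaria", "Early Iron Age",
                "Bulgaria: c. 3,000 to 2,500 years ago",
                ["https://example.org/periodo/bulgaria-early-iron-age"]),
    ]
}


def dropdown_options() -> List[tuple]:
    """(stored value, displayed text) pairs; the scope note disambiguates labels."""
    return [(c.concept_id, f"{c.pref_label} ({c.scope_note})")
            for c in THESAURUS.values()]


def export_term(concept_id: str) -> dict:
    """Everything a recipient needs to interpret a stored value."""
    c = THESAURUS[concept_id]
    return {
        "id": c.concept_id,
        "prefLabel": c.pref_label,
        "scopeNote": c.scope_note,
        "exactMatch": list(c.exact_match),
    }


print(export_term("eia-britain"))
```

Because records store `eia-britain` rather than the string “Early Iron Age”, two objects with the same visible label stay distinguishable after export.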

Arches: Preserving Data for Cultural Heritage

With Arches, we’ve tried to build data longevity into the software. For us, that means exporting the full data schema (and the semantics needed to understand it). It also means exporting the definitions of the terms we use to describe individual cultural heritage objects.
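Here is a minimal sketch of what exporting the schema and the terms along with the data could look like, assuming a simple JSON bundle. The layout, the `MODEL`, `THESAURUS` and `RECORDS` structures, and `build_export` are hypothetical and do not describe Arches’ actual export format. The point is the check, before anything is written out, that every field is defined in the model and every term resolves to a thesaurus entry.

```python
import json
from typing import Any, Dict, List


def build_export(records: List[Dict[str, Any]],
                 model: Dict[str, str],
                 thesaurus: Dict[str, Dict[str, str]]) -> str:
    """Bundle records with the model and term definitions needed to read them."""
    for i, record in enumerate(records):
        for name, value in record.items():
            kind = model.get(name)
            if kind is None:
                # A field the model does not define has no documented scope.
                raise ValueError(f"record {i}: field {name!r} is not in the model")
            if kind == "concept" and value not in thesaurus:
                # A term without a definition cannot be interpreted later.
                raise ValueError(f"record {i}: term {value!r} is not in the thesaurus")
    bundle = {"model": model, "thesaurus": thesaurus, "records": records}
    return json.dumps(bundle, indent=2, sort_keys=True)


MODEL = {
    "name": "string",
    "period": "concept",  # values are thesaurus concept ids, not labels
}
THESAURUS = {
    "eia-britain": {
        "prefLabel": "Early Iron Age",
        "scopeNote": "Britain: c. 2,800 to 2,301 years ago",
    },
}
RECORDS = [{"name": "Axe", "period": "eia-britain"}]

print(build_export(RECORDS, MODEL, THESAURUS))
```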